Tasmota and Autonomous Turn-off Thoughts

Right now I’m in the process of writing reviews, some of which can’t be released until product launch dates. Meantime, one of the main features of the free, open-source Tasmota device control firmware is its ability to control various devices and monitor various sensors internally without resorting to “the cloud” (or “someone else’s server” for the cynical).

Tasmota comes in rather handy if you don’t 100% trust your external provider Internet connection (something I’ve come to realise is very sensible here in rural Spain).

So while I like to use Google Home and Alexa, it has become blindingly obvious that they are both useless if you lose your external connection. Hence my home control is primarily internal (with VPN access for external control) – I do use the odd cloud-based device but mostly I use Node-Red on a (UPS-supported) Raspberry Pi to control Tasmota-driven devices and some Zigbee by using a Sonoff Zigbee dongle (was the ZBDongle-P – now upgraded to the ZBDongle-E) and Zigbee2MQTT on the RPi.

That all works well but yesterday I started to realise that one of my routers had failed – this router uniquely sits in a box in our pergola, powered by solar-charged batteries along with various external cameras and outside lights.

While it will always be the case that if the external Internet access fails I’ll have no way to control lights etc. externally, this is the first time I’ve been unable to control them internally. It occurred to me this morning that while I really need the extra power of Node-Red to control when and how various devices turn ON (and subsequently off), it would not hurt to use a Tasmota rule or timer to turn individual items OFF somewhat after the designated off time. For example, my lights generally come on at lighting-up time and go off at perhaps 11:30pm depending on the time of year, with some staying on or off when we’re on holiday or on certain days. I also have manual overrides and groupings which start to stretch what Tasmota could do on its own.

HOWEVER, by adding a rule or timer to turn said devices OFF at, for example, midnight, then even IF the WiFi went dead in my absence, at least I’d not end up with an inflated electricity bill, as Tasmota would ensure, entirely locally, that lights and other appliances were not left on unnecessarily. I’ve been known to be out of the house for weeks and it isn’t unheard-of to lose WiFi access internally, though I’ve minimised the possibility by various means.

In this instance I failed at first to grasp that the pergola router had gone down: a solar battery issue meant the batteries died hours after turning on the external lighting but before lights-out time. A few times recently we’ve returned home late from the pub to find external lights flashing on and off rather embarrassingly. I now realise that the pergola power had died before the lights were turned off, and as the batteries tried to recover there would be power to the IoT-controlled lights for a few seconds, but not long enough for the external router to initialise and pass on the once-a-minute repeated OFF command from Node-Red back in the house.

This new (for me) local OFF override idea using a Tasmota timer will hopefully prevent such events happening in the future.
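A minimal sketch of that local OFF override, assuming a single-relay device and a midnight cut-off. Tasmota's `Timer<x>` command takes a JSON payload and `Timers 1` enables timers globally; the helper name `off_timer_payload` and the chosen time are illustrative assumptions, not taken from my actual configuration.

```shell
#!/bin/sh
# Build the JSON payload for a Tasmota "Timer<x>" command that switches
# the first relay OFF every day at a fixed time.
# The function name is hypothetical; the JSON keys are Tasmota's timer fields.
off_timer_payload() {
    off_time="${1:-00:00}"   # HH:MM, defaults to midnight
    # Mode 0 = fixed clock time, Output 1 = first relay, Action 0 = OFF
    printf '{"Enable":1,"Mode":0,"Time":"%s","Window":0,"Days":"1111111","Repeat":1,"Output":1,"Action":0}\n' "$off_time"
}

# Print the two commands to paste into the device's web console
echo "Timer1 $(off_timer_payload 00:00)"
echo "Timers 1"
```

The same payload could also be sent over MQTT, for example with `mosquitto_pub -h <broker> -t cmnd/<device-topic>/Timer1 -m "$(off_timer_payload 00:00)"`, assuming the device still uses Tasmota's default topic layout. Once stored, the timer runs on the device itself, so it should still fire with the router down, provided the device already has the correct time.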

Solar Problems

I’ve been using these solar orange flicker-LEDs for some time now after initially purchasing the mains-powered versions (never had any issue with the latter).

You probably know the type I’m working on: the bulb section can be over 20cm long and 10cm in diameter.

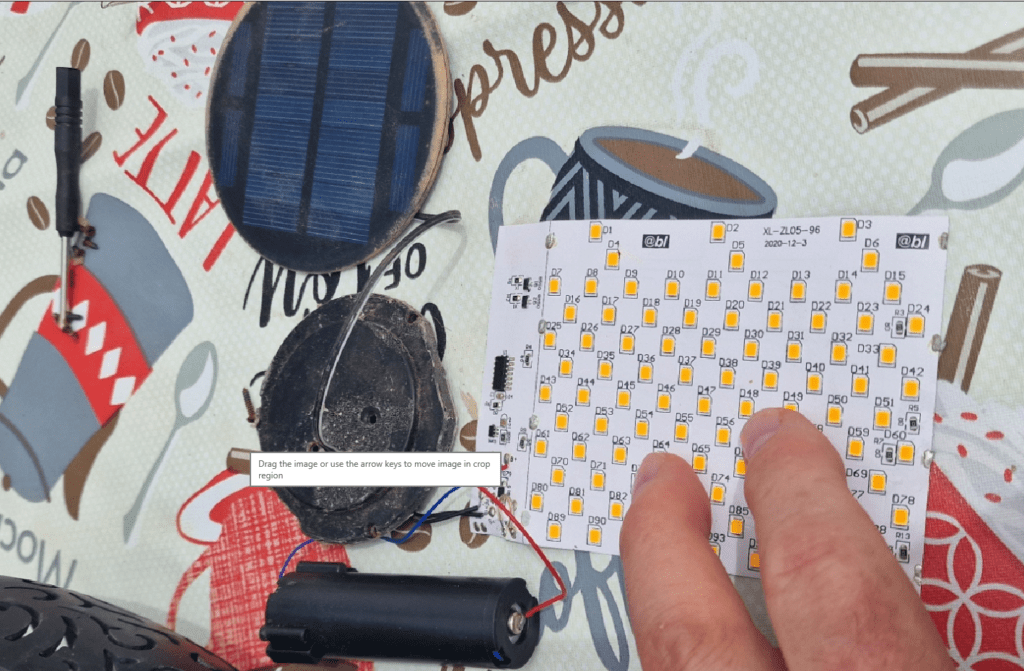

As you can see in the image above, I’ve opened this one up and carefully unsoldered the flexible PCB containing 96 LEDs and the circuit, exposing the (in this case) standard 18650 battery and solar panel. I checked the battery – 4.2V – which means it has been sitting outside charging every day. I removed the on-off switch ages ago (and shorted out the wires) – they’re always a source of problems (damp, thin wires)…

After many months this lamp has simply stopped: it does not light up at all (two of them have done the same). Connections to the PCB from the solar panel and battery could not be easier, hence my confidence that the batteries and panels are both fine. I looked VERY carefully at the PCB: no obvious dry joints or dislodged components, no signs of burning. I even checked the voltage on the PCB from both solar and battery – fine – the solar voltage being slightly higher than the battery voltage.

Not that long ago these lamps were €9 from AliExpress and stores like B&M in the UK. I was about to bin these two when I realised that Ali now want €20 each – whereas Amazon – well, let’s not even go there.

Ultimately I gave up (I HATE being defeated) but if anyone has come across the same issue, feel free to comment. It is hard to fight the (admittedly OTT) idea of planned obsolescence with Chinese solar lights – they SHOULD be good for decades but never are. I have 3 large stainless+glass solar lights purchased from the now-defunct MAPLIN store over a decade ago and these have stood the test of time. At the time some were on special offer at £6 each, so likely twice that amount normally (sadly no longer available), but typical solar light lifetimes today are a fraction of that.

RPi5 WIN

I couldn’t really justify a full blown entry for each of these headings – but anyone upgrading from RPi4 32 bit Bullseye to RPi5 64 bit Bookworm, may, like me depend heavily on RPI-CLONE. I’ve talked about it a lot in here – meanwhile a pal of mine is busy convincing me that if I don’t keep up to date I could end up with dead code… so – my current home control was until yesterday (update April 15, 2024) RPi4 with MQTT, Node-Red, Zigbee2MQTT and SO much more – all put together on an even earlier Pi OS and earlier RPi, thanks to THE SCRIPT which you’ll see in the blog – with help from others I developed all of this – The Script and my home control setup over a very long time.

So my pal Antonio said I should consider – despite the work, upgrading to RPI5 with the latest Bookworm 64 bit OS – faster, better etc – and it IS faster. I bought an RPi5, loaded 64 bit Bookworm onto it and Antonio started to put together some DOCKER images for Node-Red, MQTT etc etc.. all GREAT – but for one thing. We did this on an SD and I put my usual SSD onto the RPi with a USB adaptor. We got the VERY basics up and running – then hit a BIG SNAG.

The original RPi-Clone no longer works on this new setup – RPi5 and the new Bookworm… worse – BillW who developed it hasn’t updated it for some time. I went off to two other repositories – guys who had started working on updates of rpi-clone. Their ISSUES sections were both CHOCK-FULL of comments – by the time I was finished I could not make head-nor-tail of which one if any worked – so I asked questions and ended up with this simple install:

curl https://raw.githubusercontent.com/geerlingguy/rpi-clone/master/install | sudo bash

All very nice but it would not work. I spent DAYS on it, with conflicting comments about UUIDs possibly not matching and all sorts of potential issues. I was about to throw in the towel even with the install above, as my SSD clone simply would not boot (dead – nothing but 2 solid lights), when another fellow suggested it might help if I attached a monitor to the RPi5 (something I never do as I use Mobaxterm on the PC to access my RPis by SSH). I did the clone from SD to SSD, powered down the RPi5, removed the SD and powered back up with the monitor connected. Lo and behold, a message popped up on-screen: boot failure, your power supply isn’t powerful enough. I was using an official 4A 5V RPi supply, but the message insisted that with USB boot I need 5A – never seen that before. The same message also told me how to get around this by making a change to config.txt (SSD mounted on my PC): a simple addition anywhere in that file. Add this line:

usb_max_current_enable=1

With that, I put the SSD back onto the RPi5 and powered up – perfect. I tried the new version of rpi-clone again to create an SD from the SSD – no problem – even my old shortcuts I’ve referred to in the blog work perfectly.
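For anyone who would rather script the config.txt change than edit the file by hand, here is a small sketch. The helper name `ensure_config_line` and the example path are assumptions; point it at wherever the boot partition of the SSD is actually mounted.

```shell
#!/bin/sh
# Append a setting to a config file only if that exact line is not already present.
# Usage: ensure_config_line <path-to-config.txt> <line>
# (Hypothetical helper, not part of rpi-clone or Raspberry Pi OS.)
ensure_config_line() {
    config_file="$1"
    line="$2"
    # -q quiet, -x whole-line match, -F fixed string (no regex)
    if ! grep -qxF -- "$line" "$config_file" 2>/dev/null; then
        # Make sure the file ends with a newline before appending
        if [ -s "$config_file" ] && [ -n "$(tail -c 1 "$config_file")" ]; then
            printf '\n' >> "$config_file"
        fi
        printf '%s\n' "$line" >> "$config_file"
    fi
}

# Example (path is an assumption; adjust to your mount point, run as root if needed):
# ensure_config_line /boot/firmware/config.txt 'usb_max_current_enable=1'
```

Running it twice leaves a single copy of the line, so it is safe to repeat after each fresh clone.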

Now that I can easily (one-liner) clone my work just as before – I can calmly get on with the task of ripping out and checking many thousands of lines of old code – but at least now I can do that safe in the knowledge that I can make backup clones at any time. I’m very happy.

Indeed, almost everything is now working on the new RPi5 setup in Docker including my old ESP-GO-based controllers (I developed that extensive ESP8266 firmware long before Tasmota appeared on the scene – it works well but the web interface leaves something to be desired and I can’t compete with the army of support that Tasmota attracts, so today the latter is my ESP8266/ESP32 firmware of choice).

I hope this helps someone else.

i added a menu to manage all this stuff: once you select a service, the actions menu shows only the actions that make sense for the container’s status (on the main menu, an R means the container is running), so you can’t run start on an already started container, or logs or bash on a stopped one

go inside the DockerIOT folder via shell, then run:

wget https://raw.githubusercontent.com/fragolinux/DockerIOT/main/iotmenu.sh

to run it: “bash iotmenu.sh”

take a look at the bottom of main page, i added a bunch of useful aliases, too, but all of them can now be used via this interactive menu

https://github.com/fragolinux/DockerIOT#useful-aliases

Hi Pete,

Glad I found out that the version of rpi-clone that I was using was no longer maintained!

I thought it might be worth mentioning that a note in https://forums.raspberrypi.com/viewtopic.php?t=367178 points to a useful intro page here:- https://rpi-clone.jeffgeerling.com/

B.

actually is already in the last part of the article, as a oneliner to be run 🙂

I know. I just thought it might be useful for casual readers to get to the start / info page before jumping into installation.

The link given performs an installation from a github-related url; If you were to assume that https://github.com/geerlingguy points to the repository from that, you’d be wrong; even though the repository actually is at https://github.com/geerlingguy/rpi-clone, you only find that out by going to https://rpi-clone.jeffgeerling.com/

Strange, but true. So, I just thought it would be helpful to include it.

you’re right, well done… and Jeff is a nice guy, follow him on youtube, lots of good info 🙂

John – Antonio – it isn’t as simple as importing Node-Red flows into a new system or I’d have done that ages ago. In my case the various flows rely on lots of external utilities and services – some of which I’ve not touched in years as everything just works. Without making sure all those utilities and services are in place before importing the flows, you end up with a shed-load of errors in Node-Red which can be very confusing. Don’t minimise the task of upgrading – especially for someone who is not a full time tech 🙂

i know your environment, i was just doing it easier for our fellow reader 🙂

that does not change the fact that your setup is actually today neither manageable nor upgradable, so, sooner or later, you’ll need to start over, and as your setup is complicated (i know, we’ve been friends for how long now? a bit less than 10 years i think), you’ll need to check and migrate, flow by flow, from the current setup to a new one…

and it would not be a smart decision to move again to an ad hoc “tailored” setup on a single system, this is why i’m spending my time in doing the docker setup, which will allow you (and others) to choose where to run this, even in the future…

i could spend my free time better, if nobody is interested in this, of course 🙂

Just like you Pete I’ve dipped my toe into the Bookworm/Docker upgrade and each time I’ve found an insurmountable hurdle (for me anyway) and had to back off.

I’ve also fought with the RPI-clone and then discovered that I could VNC and use the native copy. Then mpg123 would no longer play ball etc etc.

Anyway maybe I’ll have another go and treat myself to a nice new PI5

I’ve now gotten as far as having Node-Red and MQTT running on the RPi – thanks to Antonio. I’ve just spent the night making sure all the nodes are on RPi5… but as for my flows – so much configuration to do – subflows, it’ll be a while before I can actually DO anything with this – meanwhile the house continues to run on RPi4, thank heavens I sorted rpi-clone (an updated compatible version) out (I’ve detailed in the blog what the issue was). So, copy the nodes across – update/upgrade, clone.. and from here on it will be baby steps with clones several times a day.. simply cannot chance losing my work – heating, lighting, washing machines, pergola controls – I’m not sure anyone but me has any idea how much my home relies on this setup – but at least with working rpi-clone – I can experiment safe in the knowledge I can always go back a step. Could not contemplate the change without it.

ok, but that’s not related to docker or anything else: that’s something you should have put on the plate, as a natural evolution of your setup…

this is why i’m pushing you and anybody else to move away from a local setup as it was previously, with “the script”: that’s an old way to do stuff, it made sense at its time, not today, as it binds you to a specific machine (in your case by the 32bit os you were using) and os version…

moving flows 1 by 1 will allow you to clean up old stuff not needed anymore, or redo something in a different way, in case…

or, you can just stick with your current setup, which works, but don’t expect to update it beyond a given level or time, because sooner or later it WILL break, with some update, or because you need a newer component not installable on that old OS… anything in python < 3.10 is going to die soon, just saying…

and you can always use rpi-clone (given somebody fixes it on newer hardware, as Jeff seems to have done) as before… but start getting rid of all those cli commands, not needed anymore, like the node-red start, stop, etc, most of them are useless in a containerized setup, or have just had their day…

i go in cli RARELY, on my dockerized systems, these days, so no need of fancy prompts or whatever else, the maintenance is minimal and can be done in a more “spartan” way, without wasting time…

and in a “spring cleanup” time, i would have not installed EVERY node you had on your previous setup, as probably some of them are not needed anymore… you’re just getting nodered (re)start slower without real advantage…

i prefer to move the flows 1 by 1, check if missing nodes, add them as i need them, reconfigure them, getting rid of the unused or useless ones… i mean, probably you still have nodes to access local rpi gpio, why migrate them? They’re even deprecated, there are newer ones, now…

Not the first time I’ve tried this – the last time – NR just kept falling over as I didn’t have the relevant nodes installed – I had to give up – this way at least missing nodes won’t be the reason – and the ones I installed, NR says they were in use by at least one flow. Credentials will be the next hurdle.

ok, understood, it could be easier this way for you with so many flows, indeed

I will get there…. as long as I’m confident having a working backup – and you don’t have a heart attack … and of course I have to keep notes of where zigbee2mqtt’s data folder is – no longer in /opt/zigbee2mqtt…. etc etc…. you are right about the pi-specific nodes though, never use those now….

no need to know folders, use native export import functionalities of any software, z2m should have one (i migrated from zha to z2m and back to zha a few times)

and btw, all folders are in a single point, all under /root/DockerIOT/servicename

again, if anyone wants to contribute a bit so i could get an RPi5, and continue porting and maintaining “the script” (but in this newer edition, so more portable to future devices and easier to maintain, adding back menu to select each option and other customizations, and backup/restore, too), feel free to make a little donation on my paypal:

fragolino (AT) gmail.com (or just @fragolinux on paypal itself)

much appreciated: as i said, i can’t test anything without having an actual device on which to test, and not interested in buying one myself because i use a completely different setup, now, and an rpi5 with 8gb ram is about 100€ right now on amazon.it

I thought of getting the 8GB but as I’ve managed with 2GB on rPi4, it seems rpi5 with 4GB should be fine. With all nodes in but no flows yet, RPi5 (my cls command inc free mem etc) says 3GB out of 4.24GB free.

ram management in linux is VERY different from windows… linux allocates as much ram as it wants, real usage is way below that, it can remove stuff from ram if needed, otherwise it’s optimized to use it to speed up things… i had home assistant with nodered working on a nanopi with just 512mb ram…
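To see the difference between "free" and actually available memory, a quick sketch that reads the kernel's own figures; the helper name `mem_kib` is made up for this example, and the optional second argument exists only so it can be pointed at a saved copy of the file.

```shell
#!/bin/sh
# Print one field (in kB) from a meminfo-style file, defaulting to /proc/meminfo.
# MemFree is memory not used for anything at all; MemAvailable is the kernel's
# estimate of what new programs could use once reclaimable cache is released.
mem_kib() {
    field="$1"
    file="${2:-/proc/meminfo}"
    awk -v key="$field:" '$1 == key { print $2 }' "$file"
}

# Only report when running on a Linux system that exposes /proc/meminfo
if [ -r /proc/meminfo ]; then
    echo "MemFree:      $(mem_kib MemFree) kB"
    echo "MemAvailable: $(mem_kib MemAvailable) kB"
fi
```

On a box that has been up for a while, MemAvailable is usually well above MemFree, which is the point being made above: cached data counts as "used" but can be dropped when needed.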

Hi.. referring to DrFraggle github.

I’m in Pete’s camp with all the usual node-red etc stuff and keen to migrate to docker. On github you have “easy install: just run a script to select what you want to install, choose your credentials, let it run”

There are all the folders for mosquitto etc. Do I copy them to the RPi, and if so where? So far I’ve got docker installed on a clean “Bookworm”. Your install script didn’t work for me so I just went with “curl -sSL https://get.docker.com | sh”

sorry, main readme is not updated, i’ll do ASAP, my bad… it was written years ago, then as Pete was not interested, i did not update it, till recently…

yes, docker is installed as you did, or it should already be in bookworm repositories, probably these days

remember to add docker-compose, too: run the last bits on that readme to install the newer version, then add to your .bashrc this alias:

alias docker-compose="docker compose"

or you can just use “docker compose” everywhere you see “docker-compose” (now compose is a plugin of docker itself, before it was a separate command, which is not updated anymore, so better go with the new syntax, but the alias will take care of the old one, in case)

to setup my environment, do this:

sudo -s -H

cd

git clone https://github.com/fragolinux/DockerIOT.git

cd DockerIOT

then please follow the readmes which you’ll find in each of the services directories to bring them up… each of them will have ALL its configs and files in their own directories

thanks for that but I’m lost here and will wait for your write up.

pi@Fraggle:~ $ sudo -s -H

root@Fraggle:/home/pi# cd

root@Fraggle:~# git clone https://github.com/fragolinux/DockerIOT.git

Cloning into ‘DockerIOT’…

remote: Enumerating objects: 272, done.

remote: Counting objects: 100% (125/125), done.

remote: Compressing objects: 100% (89/89), done.

remote: Total 272 (delta 45), reused 107 (delta 27), pack-reused 147

Receiving objects: 100% (272/272), 150.15 KiB | 2.31 MiB/s, done.

Resolving deltas: 100% (101/101), done.

root@Fraggle:~# cd DockerIOT

then I looked at readme for node-red install

root@Fraggle:~/DockerIOT# chown -R 1000:1000 data

chown: cannot access ‘data’: No such file or directory

damn… create the folder before, please…

mkdir data

then again the chown… i’ll update the readme, was written on the rush while we were testing with Pete

i added the data folder inside the nodered one to the repo, so whoever will use it after, will have it already there

oh, if you already have my DockerIOT, and want to get newer additions in the future, just go in there and run:

git fetch

git pull

Apologies as I’m not great at this. Having created the directory “data”, where is it? I’m searching around in WinSCP and there are no folders in the docker directory apart from cli-plugins. Do I need to copy folders over?

you should have been inside $HOME/DockerIOT/nodered folder when you created the “data” new one

Absolutely confused. I can “ls” and see stuff in terminal but nothing shows in WinSCP. Is this some sort of virtual setup? It’s probably best for me to start from scratch with docker.

thanks for your help.

sorry, i don’t understand… what did you expect to find there? Of course it’s empty, it’s a bare new setup, just containerized instead of running all over your host filesystem, as in The Script “flavour”…

of course you need to start from scratch, migrating your old setup, piece by piece, as Pete himself will have to do, and this is independent from the way the services are brought up: you’re migrating from an old system, Pete from a 32bit one…

nodered, just run it empty with this new way, then export your old flows and import them here, that’s the correct way to do it, not “copying” files around…

I’ll make a new comment here as this indenting is likely getting awkward by now.

This is a brand new build and I do intend to start from scratch (not copying files). It’s just that after following your instructions for ownership and node red etc, there are no new files/folders/containers on my PI. It was as if I had been working in a virtual environment. Anyway I’ll do some more research. Thank you again.

because the files are under /root and not under /home/pi 🙂

if you look at my comment where i told you to do sudo, and git clone, between there’s a “cd”, which brings you inside the running user home directory, which, because of the previous sudo line, is root 🙂

you need to allow ssh login as root to go in that folder with winscp, usually this is not allowed

Antonio is a clever man and has been a good friend – but he makes the mistake we all do – I make the mistake of telling local villagers that setting up a router is easy – only to find they may not know the difference between WiFi and 4G etc. Antonio forgets I’m 70 and have a memory that is good for nothing. If I don’t blog it, I forget it 🙂

If you’re going down the Docker route I’d suggest checking out IOTStack. It’s a “system” for installing loads of containers for home automation, including the ones you’ve mentioned.

It keeps the data in specific folders, so you just back up the data rather than the entire Pi. Can be rebuilt on a new Pi quickly.

https://github.com/SensorsIot/IOTstack

i agree, that’s the way to go if you need all that stuff…

i created this repo below before that Andreas’ one, and i put it aside when i saw his repo… i recently updated mine just to play with Peter (he didn’t want to go full docker nor use that bigger setup), and added just the bits he asked, once he needed them… everything is local in a single folder even with my setup, of course, easy to backup, but i plan to add a proper backup and restore feature soon…

the good thing about a docker setup is that you can move from rpi to x86 pc or vm, pretty much flawlessly… a minipc, way more powerful than any rpi out there, is cheaper than a full rpi setup (rpi, psu, case, microsd, ssd, adapters, etc)

my WIP setup: https://github.com/fragolinux/DockerIOT

I’ve got my older pc running dietpi x86 on VMWare and under that Portainer running docker-ESPHome. Just like managing a pi system!

yup, i used docker-compose files, indeed 1 per each service (each in a separate folder, so you can bring up just the ones you need)

each folder contains a readme with additional info to customize each service (some requires a password, some the device to be mounted for zigbee2mqtt, etc), we tested everything on Pete’s rpi5 with success

i think the problem with rpi-clone is just about an “old” way to deal with these things… it has always been a precarious setup (it does not use snapshots like in modern filesystems, but copies live, changing, files, on the fly…): the fact it works does not mean it’s perfect: sh1t happens, and eventually will, when someday you copy a big file while it’s in use, and you get a corrupted backup with a false sense of “rightness”…

but i understand that in Pete’s view, this is more than enough and saved his a$$ many times… i don’t use “the script” anymore (i don’t even have an rpi5 to test it, nor plan to buy it… but if someone wants to contribute a bit to let me have one, even a little by many people, happy to be back on developing), as i think a dockerized setup is WAY better, these days…

with docker you can have a setup which can be easily moved even between completely different hardware architectures (given the docker images exist: each one i used in my setup are both x86 and arm64), by just copying over the folder with all the services configs and data…

if Pete wants to go on this way, i’ll manage to add proper backups (with a script to have them copied elsewhere) and restore, not complicated at all (we did yesterday on the fly with just tar…), but in the end, he HAS to move away from his running “production” setup, as it’s still based on a 32bit rpi, fully local, setup…

so, given he has to someway copy over his nodered flows to a new setup, why STILL go in a local setup which cannot be managed, cannot be VERSIONED, and needs attention every time a major OS update is out… with docker, you need 0 requirements on the host itself, aside docker itself: the os becomes an “utility” or facility, stop… all the other stuff is fully segregated in a folder, with its own data and configs (not messed around a full filesystem, like now), and it’s fully portable to a different machine or arch, by just copying the folders…

you don’t need to deal with php/npm/whatever versions: just find a docker image that has what you need, in the version you need, and it will be ALWAYS the same, VERSIONED and sealed…

and no need to deal with rpi-clone anymore: in case something breaks, just install a bare minimal OS, docker, docker-compose, copy back the folder, and bring up again all your services, exactly where they were…
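A rough sketch of the "just tar" approach mentioned above, archiving one service folder with its configs and data. The function name `backup_service` and the example paths are assumptions for illustration; this is not the backup script that ships in the DockerIOT repo.

```shell
#!/bin/sh
# Archive one service folder (configs + data) into a dated tarball and
# print the path of the archive that was created.
# Usage: backup_service <base-dir> <service-name> <destination-dir>
backup_service() {
    base_dir="$1"
    service="$2"
    dest_dir="$3"
    archive="$dest_dir/$service-$(date +%Y%m%d-%H%M%S).tar.gz"
    mkdir -p "$dest_dir" || return 1
    # -C makes the paths inside the archive relative to the base directory
    tar -czf "$archive" -C "$base_dir" "$service" || return 1
    printf '%s\n' "$archive"
}

# Example (paths are assumptions; stopping the container first gives a consistent copy):
# backup_service /root/DockerIOT nodered /mnt/backups
```

Restoring on a fresh machine would then be a matter of `tar -xzf <archive> -C /root/DockerIOT` followed by bringing the service up again with docker compose.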

Interesting! As a non-unix person (I spent 20 years with Dec vax mini/mainframes) I’d be interested to know what you use as a backup solution. I use rpi-clone too, but thought the utilities that it used were smart enough to copy safely. Otherwise, could you point me to the right places?

Thanks!

my setup is FULLY inside home assistant, so i have mosquitto, nodered and whatever as its own internal addons… then i added the gdrive backup addon, so i have now 1 week of daily backups locally, and 15 on gdrive, all automatic… i have even a normal cronjob that copies over via scp the local backups on a network share, so this is more than enough for my needs…

2 months ago my intel nuc died, i bought a new minipc (n95 with 16gb ram and 500gb ssd) on which i installed proxmox, then used this script: https://github.com/tteck/Proxmox/blob/main/vm/haos-vm.sh to create a new HAOS vm, once it booted i just pointed to my previous network share, and selected my last previous backup file, everything was restored with 0 issues, after i passed through the host bluetooth adapter to the vm, and the same for the itead zigbee dongle-e usb controller

in half an hour i had everything installed on bare metal with proxmox, and in vm with home assistant… having it as a vm allows me to even create proxmox backups, or snapshots just before a major update, so if something goes wrong, i can go back to previous state in just seconds…

I’m glad HA is now supporting backup with containers, but I don’t have everything inside HA. I have a separate pi4 for most of the NR automation because a single pi4 wouldn’t hack it; so I need a backup solution for that and the non-HA separate systems, including 2 pi-hole machines.

Any suggestions?

Thanks!

what are pi-hole machines? virtual machines? containers? what?

if containers, take a look at my backup script in the root of my github repo, or github is full of other solutions and scripts

I have 5 boxes running Dietpi.

2 x Pi4 (see attached for details)

2 x Pine 64, one running zigbee2MQTT in a container, the other running Pi-hole installed (couldn’t get it to work properly in a container)

2 x Pi3 one running MQTT server, the other running Pi-Hole.

but, as said, if Pete wants to follow this new way, i could add a proper backup solution someway, just for that folder… there’s already a backup script inside my repo (you can go in each service folder and run it), but it creates a bigger, full backup, including the docker images… which is very good for people like me, who prefer to have control on which parts you restore, selectively

RPI-CLONE (and now the brought-up-to-date version) has served me well for years. If I’ve just made a few simple changes, say in Node-Red – a complete rpi-clone to an already-used SD takes what, less than a minute…. if it took several steps or a long time I’d never have lasted with it… completely reliable – never lost anything… and that’s a clone – not a backup for which I’d have to install an operating system before I could use it – a complete clone – and the size of SD or SSD doesn’t matter as long as it’s the same or larger than the original.

yup, but the main problem of your setup is what you’re dealing with right now: you’re bound to THAT installation, which is now old and still 32bit…

as you need to move away from that, better to go to a setup which is hardware and architecture agnostic… so, no more sw installed on the bare metal, all containerized, portable and manageable…

and rpi-clone would work in this setup, too, anyway, so best of 2 worlds… or at least, till you stay on rpi: i moved on pc virtual machines or containers years ago, never go back to these problems…

Erm, doesn’t a mini-PC use more power? And I maintain you can’t get any easier (or faster, usually) than rpi-clone – which of course only works on RPi. Never under-estimate people’s reluctance to do regular backups unless they are VERY easy to do – and I mean idiot-easy. Without rpi-clone I would never have started to attempt this transfer to RPi5 we’re playing with.

so if nobody had taken care of updating rpi-clone to work on new OSes or devices, you would have stuck with your old rpi4? till when? Till nodered or anything else needed an updated package that you couldn’t install on that old 32bit os… 🙂

minipc power, lower than an rpi5… mine consume about 6w, an rpi needs a 3A psu, at least to boot… power is comparable…

backup: snapshots and backup are a few seconds task in a virtualized setup… machine dies? New one bought, copied backups, restored, up and running, with a completely different cpu, ram, etc… don’t underestimate other platforms benefits just because you know just one 🙂

oh, with about 300€ i can have a full cluster of 3 minipcs, with vm and containers replicated both as storage and power, moving around vm and containers from one to the other if needed, or completely unattended if 1 node dies… do it with rpi 🙂

That being the case – RPi people have lost the plot – the RPi was SUPPOSED to be cheap and low-power (remember the original ad – the $25 PC – or was that the $25 network – I did that too). They seem to have forgotten about all of that. And to control the home, it is largely my choice to keep updating – without doing any updates, just leaving it alone, most of it would just keep working. I just happen to be the type who always updates…. I’ve had dozens of mini-PCs as you know – and still have a spare – and also an Orange Pi and FA kit etc.

the world is not the same as 10 years ago, chips are way more expensive due to pandemic and wars, and material shortage…

and you’re not even using it for what it was born for: an intro to electronics and programming… they wanted to expand their market share, could you blame them?

But power of that little device, has been multiplied many times since the 1st model…

I’ve had great success with using Portainer as a front end to Docker. It has support for compose scripts (as stacks) plus the engineering is done by a commercial company who allow it to be used free for personal use.

I use Dietpi with its simple system of installing the few basics I need – portainer, docker, ssh – and all the rest is handled with portainer stacks.

WinSCP to look after the config areas so everything is managed from Windows.

portainer is in my setup, too, but just as an utility to easily manage containers, check logs, etc: i’m used to do this via cli, but i understand other people concerns with this…

but i suggest to avoid CREATING docker compose stacks with it: just use something like my setup, or Andreas’ one, so you can still MANAGE them via portainer, but they’re portable as fully isolated in a folder, while portainer native ones are in some sort of database, or the likes, not easily backuppable or movable on a new machine…

I really think you should investigate the latest incarnation of Home Assistant. You no longer need to use much in the way of esoteric yaml. There is a really good integration with Node Red and Tasmota. I have almost no need to use a cloud based control system for anything much. Anything crucial that I use Alexa for I also duplicate via remote control fobs and Sonoff RF bridges (eg home theatre on/off, swapping between kodi and firestick etc).

I started off using Node Red/ MQTT for home control and it shortly became unmanageable. I found I could replace great swathes of NR flows with much more simple HA nodes.

Just my 2p

B.

could not agree more… i tried a few times to bring in Peter to HA, these days it’s very easy to manage and powerful, even though i still prefer writing automations in nodered (it’s fully and completely integrated in HA and vice versa), they’re now much easier to deal with even in HA itself… dashboards are a breeze to make (and with latest 2 HA releases, now you have full drag’n’drop support, too), no need of all that dns/ip mess that Pete sometimes has to deal with, integrations for everything already exist…

if pete wants, i’m here 🙂

Totally agree about NR for automations – yaml is horrible for that. That said, the latest versions of HA allow a prompt driven way to create simple automations (e.g. for scenes) that hide the yaml in the background.

Scenes are useful because they can be activated by Alexa.

Also the NR integration lets you detect which scenes have been activated, so it becomes possible to invoke NR routines from Alexa. Good stuff!

actually there has been no need to use yaml for automations in home assistant for years now… all can be done via its web gui, steps can be ordered, put in AND or OR, etc…

and, for Pete: you can have HA side by side with nodered, moving stuff to HA one bit at a time…

the old stuff we had in “the script”, honestly, who uses them anymore? Direct gpio access? And anyway, that can be used by a docker setup, too, or you can just install local, ad hoc, sw to interact with those on the host machine…